I thought about love when economist Tyler Cowen interviewed Will MacAskill recently. MacAskill is the de facto philosophical leader of Effective Altruism, or EA. (If you’re unfamiliar with the scene, I thought this was a good profile of MacAskill and EA.)

In the interview, Cowen and MacAskill talk about “partiality,” a term they use to mean love or affection. Cowen prods MacAskill to explain why he thinks partiality is unhelpful for moral reasoning, and MacAskill is happy to oblige. The result is a clarifying exchange. Rather than trying to develop universe-scale, utilitarian models of moral reasoning, Cowen asks, why can’t we accept that our morals are always grounded in our existing loves?

Cowen: “It’s not that there’s some other theory that’s going to tie up all the conundrums in a nice bundle, but simply that there are limits to moral reasoning, and we cannot fully transcend the notion of being partial because moral reasoning is embedded in that context of being partial about some things.”

MacAskill: “I think we should be more ambitious than that with our moral reasoning.”

For MacAskill, loving things is epistemically dangerous. Love intrinsically clouds your judgment about what is morally valuable and confines you to moral reasoning that isn’t very ambitious. It doesn’t seem unfair to say that MacAskill thinks we would be better moral agents if we loved less.

For what it’s worth, I think Cowen has already ceded too much ground to utilitarianism by talking about love as “partiality.” If you start from the position that loves and affections are an obstacle to a more accurate, objective understanding of morality, you’re fighting on hostile ground. Essayist Phil Christman put it well:

Of course, basing one’s morality around the things one already loves isn’t a full solution: we love all kinds of things that we maybe shouldn’t, and sometimes don’t truly love things we know have great moral value. An older approach to this problem could talk about ordered and disordered loves, about a human telos or purpose, or about the Good as something beyond aggregated preferences. That’s too much to tackle here, but I’m not convinced effective altruism has the tools to talk about these things.

Reading

Mary Harrington has a new book coming out, on women’s rights in a biotech age. She’s a spiky, original thinker, and this summer I’ve repeatedly been drawn back to her writing, especially “Our Humanity Depends on the Things We Don’t Sell.”

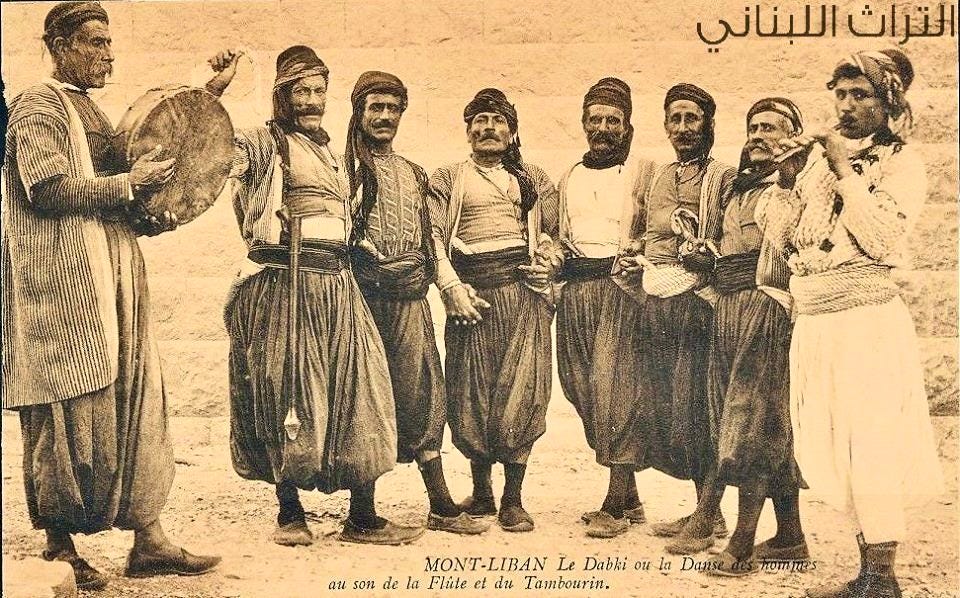

Dabke, the Arabic folk dance, gets a nice video essay treatment here.

This summer, I helped run a residency program for tech research, broadly defined. More to come, but the most visually striking project was Wendi Yan’s mammoth world-building exhibition, which touched on de-extinction, Arctic mythology, alternate history, deep time, and some glassblowing.

For Spanish-speaking Regress Studies readers (Regressives? Regressians? Regretes?), yung Peruvian icon Brunella dropped a glorious personal essay on the nonprofit-Young Founder-CEO-savant complex.

Your Real Biological Clock Is You’re Going to Die.

For large swaths of Western history, only the wealthy cooked at home, in what this essayist calls The Social Inversion of Eating Out.

Science & Tech Policy

Geothermal energy is quite possibly America’s future; I’ve written about this before. Austin Vernon breaks down the specific technological barriers to extracting more heat from rocks.

Aside from technological barriers, the main reason you can’t build new clean power sources in the U.S. is NEPA, the National Environmental Policy Act. NEPA creates procedural barriers to any new federal project, or to private projects on federal land, without mandating any environmental protections. It is the worst thing in the world. At Construction Physics, Brian Potter explains how it works in practice (it takes federal agencies 4.5 years on average to complete an environmental impact statement for a single project). Also, I sometimes work with Brian on this newsletter, and you should consider subscribing.

Casey Handmer outlines why forest fires in America are fundamentally a political problem:

Nudging doesn’t replicate after adjusting for publication bias.

EA reflects too much on the nature of the E and not enough on the A. I'm partial to your points here.

I think the concept behind nudging is sound even if the studies of it don't replicate. It can be as simple as changing the default option from one choice to another, which is widely accepted to significantly change the choice users ultimately make. I think this falls under the original book's definition of nudging as "… any aspect of the choice architecture that alters people’s behavior in a predictable way without forbidding any options or significantly changing their economic incentives.”